```json { "title": "Slash Your GPU Cloud Costs by 50% for ML & AI Workloads", "meta_title": "Reduce GPU Cloud Costs by 50% for ML & AI | Expert Guide", "meta_description": "Cut your GPU cloud computing expenses by half with expert strategies for ML engineers and data scientists. Learn about cost-effective GPUs, providers, and optimization tips.", "intro": "High GPU cloud computing costs can quickly erode your machine learning and AI project budgets. For ML engineers and data scientists, optimizing these expenses is not just about saving money, it's about enabling more experimentation, faster iteration, and ultimately, more successful outcomes. This comprehensive guide reveals actionable strategies to reduce your GPU cloud costs by 50% or more, without compromising performance.", "content": "

The High Cost of Cloud GPUs: Why Optimization is Crucial

The demand for powerful GPUs to train and deploy complex AI models has skyrocketed, leading to increased competition and, often, higher prices in the cloud. Specialized hardware, significant power consumption, and the intricate infrastructure required to support these accelerators contribute to their premium cost. For organizations and individuals pushing the boundaries of machine learning, deep learning, and generative AI, uncontrolled GPU expenses can become a major bottleneck, limiting innovation and scalability.

By strategically optimizing your GPU cloud usage, you can unlock substantial savings, allowing you to allocate resources more efficiently, expand your research, and maintain a competitive edge. Achieving a 50% reduction in your GPU cloud bill is ambitious but attainable with the right approach.

Step-by-Step Strategies to Slash Your GPU Cloud Bill

1. Right-Sizing Your GPU Instance: The Foundation of Savings

One of the most common mistakes is over-provisioning: renting GPUs that are far more powerful, or carry more VRAM, than your workload requires. Understanding the specific demands of your task is the first and most critical step in reducing GPU expenses.

- For Model Training:

  - Small Models / Fine-tuning (e.g., ResNet, smaller BERT variants, LoRA fine-tuning for LLMs): Often, consumer-grade GPUs like the NVIDIA RTX 3090 (24GB) or RTX 4090 (24GB) offer an excellent performance-to-cost ratio. They can be found on decentralized clouds like RunPod or Vast.ai for as low as $0.30 - $0.80 per hour.

  - Medium Models / Complex Tasks (e.g., larger Transformers, mid-sized diffusion models): The NVIDIA A100 (40GB or 80GB) is the industry standard for its balance of Tensor Core performance and high VRAM. Expect prices ranging from $1.50 - $3.50 per hour, depending on the provider and instance type.

  - Large-scale Distributed Training (e.g., training foundation models from scratch, multi-billion parameter LLMs): This typically requires multiple A100s or the NVIDIA H100 (80GB). While expensive on a per-hour basis, the speedup can reduce total training time, indirectly lowering overall costs.

- For LLM Inference (e.g., Llama 2 7B, 70B, Mixtral):

  - 7B-13B Models: A single RTX 3090/4090 or an A100 40GB can handle these efficiently, especially with quantization.

  - 34B-70B Models: An A100 80GB is often a good fit once the weights are quantized. A 70B model in FP16 needs roughly 140GB for the weights alone, so serving it unquantized requires more than one GPU.

  - 100B+ Models: May require multiple A100 80GBs or H100s, potentially with model parallelism.

- For Stable Diffusion / Generative AI:

  - The RTX 3090 and RTX 4090 are well-suited. Their 24GB of VRAM and strong performance with consumer-oriented frameworks make them highly cost-effective for image generation, video synthesis, and similar tasks.

Always profile your workload on a smaller instance first to determine its actual GPU, CPU, and memory requirements before committing to an expensive, oversized setup.
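Before profiling, a back-of-the-envelope estimate can rule out GPUs that are clearly too small or too large. The sketch below is one rough way to do that for inference, not a substitute for measuring the real workload. The function names and the 20% overhead allowance for activations and KV cache are illustrative assumptions, not figures from any provider.

```python
# Rough VRAM estimate for inference: weight memory plus an assumed overhead.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_vram_gb(params_billions: float, precision: str, overhead: float = 0.2) -> float:
    """Approximate VRAM need in GB (1 GB = 1e9 bytes).

    `overhead` is an assumed allowance for activations and KV cache; real
    usage depends on batch size and context length, so profile to confirm.
    """
    weight_gb = params_billions * BYTES_PER_PARAM[precision]
    return weight_gb * (1.0 + overhead)


def fits(params_billions: float, precision: str, gpu_vram_gb: float) -> bool:
    """True if the estimate fits within the GPU's VRAM."""
    return estimate_vram_gb(params_billions, precision) <= gpu_vram_gb


if __name__ == "__main__":
    for size, prec, vram in [(7, "fp16", 24), (70, "fp16", 80), (70, "int4", 80)]:
        need = estimate_vram_gb(size, prec)
        print(f"{size}B @ {prec}: ~{need:.0f} GB estimated, fits in {vram} GB: {fits(size, prec, vram)}")
```

If the estimate lands close to a GPU's capacity, treat it as a signal to profile on that GPU rather than as a guarantee either way.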

2. Leveraging Spot/Preemptible Instances for Unbeatable Prices

Spot instances (AWS EC2 Spot, Google Cloud Preemptible VMs, Azure Spot VMs, RunPod Spot, Vast.ai) offer deep discounts, often 70-90% off on-demand prices, in exchange for the risk of interruption. For workloads that can tolerate being stopped and restarted, this is one of the largest single levers for reducing GPU expenses.

- Ideal Use Cases:

  - Fault-Tolerant Training: Implement robust checkpointing so your model can resume training from the last saved state after an interruption.

  - Hyperparameter Tuning: Running many independent experiments, where the failure of one doesn't stop the others.

  - Batch Processing / Data Preprocessing: Workloads that can be easily restarted or distributed.

  - Non-Critical Inference: If your inference pipeline can tolerate occasional downtime.

- Providers: All major hyperscalers offer spot instances. Decentralized clouds like Vast.ai and RunPod essentially operate on a spot-like marketplace model, where prices fluctuate based on demand and availability, often delivering even steeper discounts.

By designing your ML workflows to be resilient to interruptions, you can harness these significant savings.
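The checkpoint-and-resume pattern can be sketched without any ML framework. The example below is a minimal illustration under stated assumptions: the training step is a placeholder, the `stop_after` parameter only simulates a preemption, and the JSON state stands in for the model and optimizer state that a real job would save (for example with `torch.save` in PyTorch).

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write state atomically so an interruption mid-write cannot corrupt it."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)  # atomic rename within the same filesystem


def load_checkpoint(path: str) -> dict:
    """Return the last saved state, or a fresh state if none exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss_history": []}


def train(path: str, total_steps: int, checkpoint_every: int = 10, stop_after=None) -> dict:
    """Toy loop that resumes from `path`; `stop_after` simulates a spot interruption."""
    state = load_checkpoint(path)
    steps_run = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_run >= stop_after:
            break  # simulated preemption: work since the last checkpoint is lost
        state["step"] += 1
        state["loss_history"].append(1.0 / state["step"])  # placeholder for a real training step
        steps_run += 1
        if state["step"] % checkpoint_every == 0 or state["step"] == total_steps:
            save_checkpoint(path, state)
    return state
```

The checkpoint interval is the trade-off to tune: frequent saves cost I/O time, while sparse saves lose more work per interruption.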

3. Optimize Your Code and Frameworks for GPU Efficiency

Hardware choice is only half the battle; software optimization is equally crucial for maximizing GPU utilization and minimizing runtime, directly impacting your cloud bill.

- Batch Size Tuning: Larger batch sizes generally lead to better GPU utilization, as the GPU processes more data in parallel. However, this is limited by VRAM. Experiment to find the largest batch size that fits your GPU's memory without causing out-of-memory errors.

- Mixed Precision Training (FP16/BF16): Modern GPUs (NVIDIA Ampere, Ada Lovelace, and Hopper architectures, such as the A100, RTX 30/40 series, and H100) excel at half-precision (FP16 or BF16) computations. Using mixed precision can significantly reduce memory usage (allowing larger batch sizes) and can speed up training by 2-3x on suitable models, leading to faster job completion and lower costs. PyTorch's torch.cuda.amp and TensorFlow's mixed precision policies make this easy to implement.

- Gradient Accumulation: If your GPU's VRAM limits your effective batch size, gradient accumulation allows you to simulate larger batch sizes by accumulating gradients over several mini-batches before performing a single weight update. This can achieve similar training dynamics to a larger batch size without requiring more VRAM.

- Efficient Data Loading: Ensure your data pipeline isn't bottlenecking your GPU. Use multiprocessing data loaders (e.g., PyTorch DataLoader with num_workers > 0), prefetching, and fast storage to keep the GPU fed with data, preventing idle time.

- Framework Optimizations: Leverage built-in optimizations like PyTorch's torch.compile() or TensorFlow's XLA (Accelerated Linear Algebra) compiler to automatically optimize your model graphs for better GPU performance.

- Quantization (for Inference): For deploying models, quantizing weights (e.g., from FP32 to INT8 or FP16) can dramatically reduce model size, memory footprint, and inference latency, allowing you to use smaller, cheaper GPUs or serve more requests per GPU.
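To make the gradient accumulation idea concrete, the sketch below uses plain Python and a one-parameter linear model rather than a deep learning framework. It is an illustration under assumptions: the model, data, and function names are made up for the example, and the equality holds exactly only when all micro-batches are the same size.

```python
def mse_grad(w: float, xs: list, ys: list) -> float:
    """Gradient of mean squared error for the 1-D model y = w * x."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w: float, xs: list, ys: list, micro_batch_size: int) -> float:
    """Accumulate micro-batch gradients, each scaled by 1 / number of micro-batches."""
    starts = range(0, len(xs), micro_batch_size)
    num_micro = len(starts)
    total = 0.0
    for i in starts:
        g = mse_grad(w, xs[i:i + micro_batch_size], ys[i:i + micro_batch_size])
        total += g / num_micro  # same effect as dividing each micro-batch loss by num_micro
    return total


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x for x in xs]
w = 0.5

full = mse_grad(w, xs, ys)                                # one batch of 8 samples
accum = accumulated_grad(w, xs, ys, micro_batch_size=2)   # four micro-batches of 2
print(full, accum)
```

Both values match, which is why a single weight update after four micro-batches of 2 behaves like one update on a batch of 8 while only ever holding 2 samples in memory at a time.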

4. Strategic Provider Selection: Dedicated vs. Decentralized Clouds

The choice of cloud provider can change your GPU bill severalfold for equivalent hardware. Providers differ in pricing models, hardware availability, and service levels.

- Decentralized GPU Clouds (e.g., RunPod, Vast.ai, Akash Network):

  - Pros: Often the cheapest option, sometimes 2-5x cheaper than hyperscalers for equivalent hardware. Access to a wide range of consumer-grade GPUs (RTX 3090, 4090) and, increasingly, enterprise-grade GPUs (A100, H100). Ideal for cost-sensitive projects and burst workloads.

  - Cons: Can have less consistent uptime, varying hardware quality (though reputable platforms vet hosts), and more basic support. Best for flexible, less mission-critical tasks where you can tolerate some variability.

  - Pricing Examples: A100 80GB on Vast.ai can be found for $1.20 - $2.00/hr (spot market); RunPod typically offers A100 80GB from $1.50 - $2.00/hr.

- Specialized GPU Clouds (e.g., Lambda Labs, CoreWeave, Paperspace):

  - Pros: Focus on deep learning infrastructure, offering enterprise-grade hardware (A100, H100) with optimized networks and robust support. Often more competitive than general-purpose hyperscalers for raw GPU compute.

  - Cons: Still more expensive than decentralized options, and may have fewer integrated services compared to hyperscalers.

  - Pricing Examples: Lambda Labs offers A100 80GB for around $2.10 - $2.50/hr.

- Hyperscale and Traditional Clouds (e.g., AWS, GCP, Azure, Vultr):

  - Pros: Unmatched ecosystem of integrated services, global reach, high reliability, and enterprise-grade support. Best for complex, integrated workflows requiring a broad suite of cloud services.

  - Cons: Generally the most expensive for raw GPU compute, especially for A100/H100 on-demand instances. Requires diligent use of spot instances and reservations to manage costs.

  - Pricing Examples: AWS EC2 P4-family A100 instances can cost roughly $3.50 - $4.50 per GPU-hour on-demand (the P4d uses 40GB A100s and the P4de uses 80GB A100s; both are sold as 8-GPU instances). Vultr offers a more competitive price for A100 80GB, typically $2.50 - $3.50/hr.
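The price ranges above translate directly into job-level costs. The sketch below is a simple comparison using only the A100 hourly ranges quoted in this guide; the 100 GPU-hour job size and the choice to compare range midpoints are assumptions made for illustration, and real quotes will drift from these figures.

```python
# A100 hourly price ranges quoted in this guide (USD per GPU-hour).
A100_RATES = {
    "Vast.ai": (1.20, 2.00),
    "RunPod": (1.50, 2.00),
    "Lambda Labs": (2.10, 2.50),
    "Vultr": (2.50, 3.50),
    "AWS on-demand": (3.50, 4.50),
}


def job_cost(rate_range: tuple, gpu_hours: float) -> tuple:
    """Return the (low, high) cost of a job for a given hourly price range."""
    low, high = rate_range
    return low * gpu_hours, high * gpu_hours


def midpoint(rate_range: tuple) -> float:
    return (rate_range[0] + rate_range[1]) / 2


def savings_vs(baseline: tuple, candidate: tuple) -> float:
    """Fractional savings of `candidate` over `baseline`, comparing midpoints."""
    return 1.0 - midpoint(candidate) / midpoint(baseline)


if __name__ == "__main__":
    gpu_hours = 100  # hypothetical job size
    baseline = A100_RATES["AWS on-demand"]
    for name, rates in A100_RATES.items():
        low, high = job_cost(rates, gpu_hours)
        pct = savings_vs(baseline, rates) * 100
        print(f"{name:14s} ${low:7.2f} - ${high:7.2f}  ({pct:4.1f}% below AWS on-demand midpoint)")
```

At these quoted ranges, the decentralized options clear the 50% savings mark against the hyperscaler on-demand midpoint on hourly rate alone, before any spot or software optimizations are applied.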

5. Efficient Resource Management and Automation

Idle GPUs are wasted money. Implementing robust resource management and automation is paramount to keeping your cloud GPU expenses in check.

- Automated Shutdowns: Implement scripts or use cloud provider features to automatically shut down GPU instances after a training job completes, after a period of inactivity, or outside of working hours. Many platforms allow you to define lifecycle rules for instances.

- Orchestration Tools: For complex workflows, use Kubernetes (K8s) with GPU scheduling, Slurm, or managed ML platforms that can intelligently allocate and deallocate GPU resources based on demand. This ensures GPUs are only active when needed.

- Monitoring: Regularly monitor GPU utilization (e.g., using nvidia-smi or cloud provider metrics) to identify underutilized instances. If a high-end GPU is consistently running at low utilization, it's a clear sign of over-provisioning.

- Containerization (Docker): Use Docker or similar containerization technologies to package your environments. This ensures fast, reproducible setup times, reducing the billed time spent on environment configuration.
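Monitoring and automated shutdown can be combined in a small idle detector. The sketch below is one possible shape for it, assuming `nvidia-smi` is on the PATH and reports numeric utilization. The 5% threshold and the function names are illustrative choices, and the actual shutdown call is left out because it is provider-specific.

```python
import subprocess

# Prints one utilization percentage per GPU, one per line, without header or units.
QUERY = ["nvidia-smi", "--query-gpu=utilization.gpu", "--format=csv,noheader,nounits"]


def parse_utilization(output: str) -> list:
    """Parse nvidia-smi output into a list of integer percentages, one per GPU."""
    return [int(line.strip()) for line in output.splitlines() if line.strip()]


def read_utilization() -> list:
    """Query local GPUs; requires the NVIDIA driver and nvidia-smi on PATH."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_utilization(result.stdout)


def is_idle(samples: list, threshold_pct: int = 5) -> bool:
    """True if every GPU stayed at or below the threshold in every sample.

    `samples` is a list of per-poll readings, e.g. [[0, 3], [1, 0]] for two
    polls of a two-GPU machine. An empty history is never treated as idle.
    """
    return bool(samples) and all(u <= threshold_pct for sample in samples for u in sample)
```

A scheduler or cron job could call `read_utilization()` every minute, keep the last N readings, and trigger the provider's stop command once `is_idle` returns true for the whole window.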

6. Data Management and Transfer Costs

Hidden costs, particularly data egress fees and cross-region transfer charges, can surprise even experienced cloud users. Efficient data management can significantly contribute to overall cloud GPU savings.

- Data Locality: Store your training data in the same region and, ideally, the same availability zone as your GPU instances. Inter-region and inter-zone data transfers incur costs.

- Compression: Compress large datasets before transferring them to reduce transfer volumes and associated costs.

- Caching: For frequently accessed datasets, implement caching mechanisms (e.g., local SSDs on GPU instances) to minimize repeated downloads and egress charges.

- Provider-Specific Storage: Utilize object storage services (e.g., AWS S3, GCP Cloud Storage, Azure Blob Storage) within the same cloud provider and region where your GPUs reside, as transfer fees within the same provider are often significantly lower or free.
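The effect of compression and caching on transfer charges is simple arithmetic, sketched below. The per-GB price in the example is a placeholder assumption, not a quote from any provider, and the 2x compression ratio is equally hypothetical; substitute the rates from your own provider's pricing page.

```python
def transfer_cost_usd(dataset_gb: float, price_per_gb: float,
                      downloads: int = 1, compression_ratio: float = 1.0) -> float:
    """Estimated transfer charge for pulling a dataset `downloads` times.

    `compression_ratio` is original size divided by compressed size
    (2.0 means the data is sent at half its original size).
    """
    transferred_gb = dataset_gb / compression_ratio * downloads
    return transferred_gb * price_per_gb


if __name__ == "__main__":
    price = 0.09  # placeholder USD per GB, for illustration only
    naive = transfer_cost_usd(500, price, downloads=4)
    tuned = transfer_cost_usd(500, price, downloads=1, compression_ratio=2.0)
    print(f"4 uncompressed downloads: ${naive:.2f}")
    print(f"1 compressed download, then cached: ${tuned:.2f}")
```

Under these placeholder numbers, caching after a single compressed download cuts the transfer bill to one eighth of the naive approach.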

Specific GPU Models for Cost-Effective AI Workloads

Choosing the right GPU is a balance between performance, VRAM, and price. Here are some top recommendations:

Consumer-Grade Powerhouses for Budget-Conscious ML

- NVIDIA RTX 3090 (24GB VRAM): A strong value GPU, especially on decentralized clouds. With 24GB of GDDR6X VRAM, it's excellent for Stable Diffusion, smaller LLM fine-tuning, general ML development, and even some medium-scale model training. It offers a great VRAM/price ratio for tasks that don't need the memory bandwidth of HBM2e or more than 24GB per GPU.

- NVIDIA RTX 4090 (24GB VRAM): A top-tier consumer GPU. Faster than the RTX 3090, especially with FP16, and also equipped with 24GB of VRAM. Ideal for similar tasks as the 3090 but with a higher performance ceiling. If available at a reasonable cloud price, it's a top choice for maximizing performance per dollar on non-enterprise workloads.

Enterprise-Grade Value for Serious Training and Inference

- NVIDIA A100 (40GB/80GB VRAM): The workhorse of modern AI. The A100 offers strong performance for large model training, multi-GPU setups, and demanding inference tasks. Its Tensor Cores, high memory bandwidth (HBM2/HBM2e), and support for TensorFloat32 make it a staple of serious AI research and production. The 80GB version is crucial for very large models.

- NVIDIA H100 (80GB VRAM): The successor to the A100, offering even greater performance, especially for transformer models and large language models. While premium-priced, its increased speed can drastically reduce training times for cutting-edge models, potentially leading to overall cost savings if time-to-solution is critical.

Provider Deep Dive: Where to Find the Best Deals

The landscape of GPU cloud providers is diverse. Here's a breakdown to help you choose wisely:

Decentralized GPU Clouds: The Cost Leaders

- Vast.ai: A marketplace connecting users with idle GPUs globally. Offers highly variable pricing, often the cheapest for spot instances. You can find A100 80GB instances for as low as $1.20 - $2.00/hr, and RTX 4090s for $0.30 - $0.60/hr. Requires careful selection of hosts and robust fault tolerance for critical jobs.

- RunPod: Similar to Vast.ai but often with a more curated and user-friendly experience. Provides access to both consumer (RTX 3090, 4090) and enterprise (A100, H100) GPUs. A100 80GB instances typically range from $1.50 - $2.00/hr. Excellent for both training and inference due to competitive pricing and good uptime.

- Akash Network: A blockchain-based decentralized cloud that aims to be censorship-resistant and highly cost-effective. Still maturing but offers promising potential for future savings.

Specialized ML Clouds: Performance & Support for Less

\n- \n

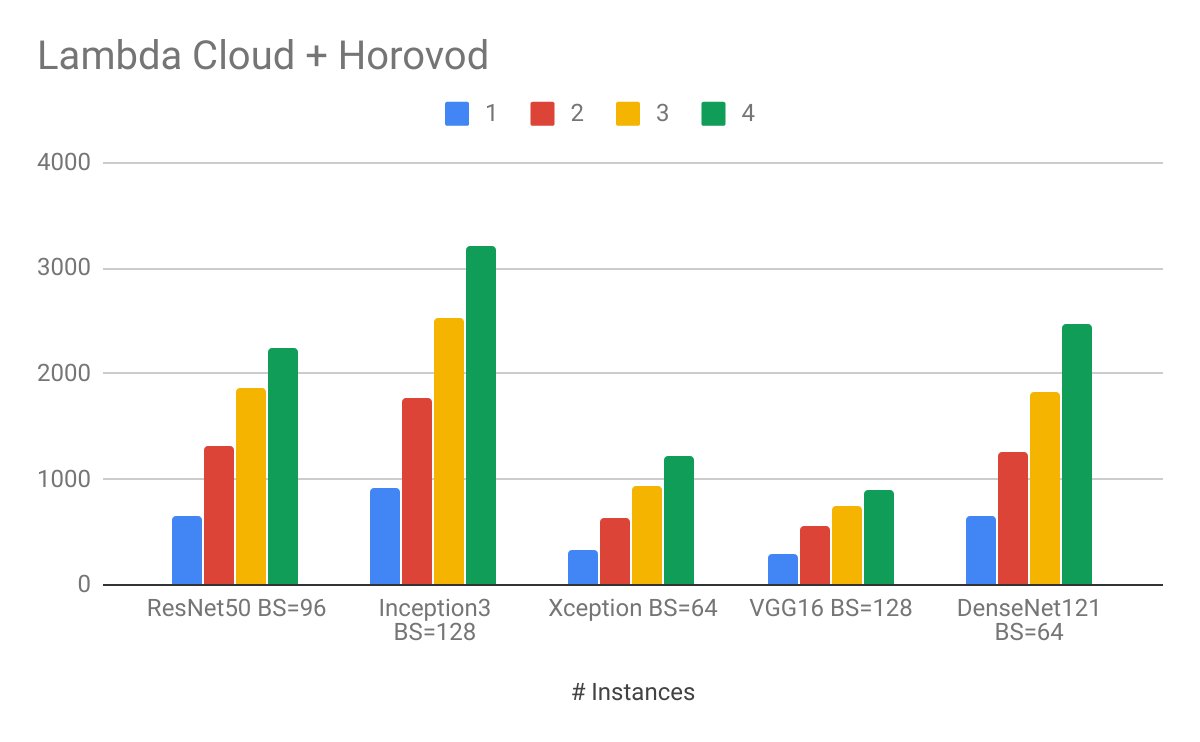

- Lambda Labs: Focuses exclusively on deep learning infrastructure, providing bare metal and cloud GPU instances with competitive pricing for A100s and H100s. A100 80GB instances are typically around $2.10 - $2.50/hr. Known for excellent support and an environment optimized for ML.

- CoreWeave: Built on NVIDIA GPUs, offering highly optimized infrastructure for AI and visual effects. Provides A100 and H100 instances at competitive rates, often undercutting hyperscalers.

- Paperspace (Gradient): Offers a managed ML platform with integrated GPU access. Good for teams looking for a streamlined development experience, with competitive pricing for various GPU types.

Hyperscalers & Traditional Clouds: Ecosystem & Reliability

- Vultr: A general-purpose cloud provider that has become surprisingly competitive in the GPU space, offering A100 80GB instances often for $2.50 - $3.50/hr. It's a strong contender for those seeking a balance of cost and reliability outside the major hyperscalers.

- AWS (EC2), Google Cloud (Compute Engine), Azure (VMs): These providers offer the most comprehensive ecosystems, global presence, and robust enterprise support. While their on-demand GPU prices (e.g., AWS A100 instances at roughly $3.50 - $4.50 per GPU-hour) are generally higher, their spot instances can offer substantial discounts (up to 70-90%). Best for projects requiring deep integration with other cloud services, strict SLAs, or complex networking.

Common Pitfalls to Avoid When Optimizing GPU Costs

While chasing the lowest price is tempting, it's crucial to avoid common missteps that can negate your savings or introduce new problems.

- Underestimating Data Transfer Costs: Egress fees can be significant, especially when moving large datasets between regions or out of the cloud. Factor these into your total cost of ownership.

- Ignoring GPU Utilization: An idle high-end GPU is a constant drain on your budget. Don't just provision and forget; actively monitor and manage your instances.

- Choosing the Wrong GPU: Both over-provisioning (paying for more power than you need) and under-provisioning (leading to longer job times or failures) are costly. Right-sizing is key.

- Lack of Automation: Manually starting and stopping instances is prone to human error. Forgotten running instances are a major source of wasted spending.

- Overlooking Software Optimizations: Relying solely on hardware upgrades without optimizing your code leaves significant performance and cost savings on the table.

- Vendor Lock-in: While convenient, becoming too reliant on proprietary services of a single cloud provider can make it difficult and costly to switch if better deals emerge elsewhere.

- Ignoring Spot Instance Interruptions: Using spot instances without implementing proper checkpointing and fault tolerance is a recipe for lost work and frustration.