The Challenge of Affordable LLM Fine-Tuning

Fine-tuning LLMs requires significant GPU power and memory. Models like Llama 2, GPT-3, and others demand substantial resources, leading to high costs when using traditional cloud providers. This guide focuses on leveraging specialized GPU cloud providers and smart optimization techniques to drastically reduce these costs.

Step-by-Step Guide to Cost-Effective LLM Fine-Tuning

- Choose the Right GPU: Selecting the appropriate GPU is crucial. Newer, more powerful GPUs are often more cost-effective per training job than older ones, even if their hourly rate is higher, because they finish the same work in fewer billed hours.

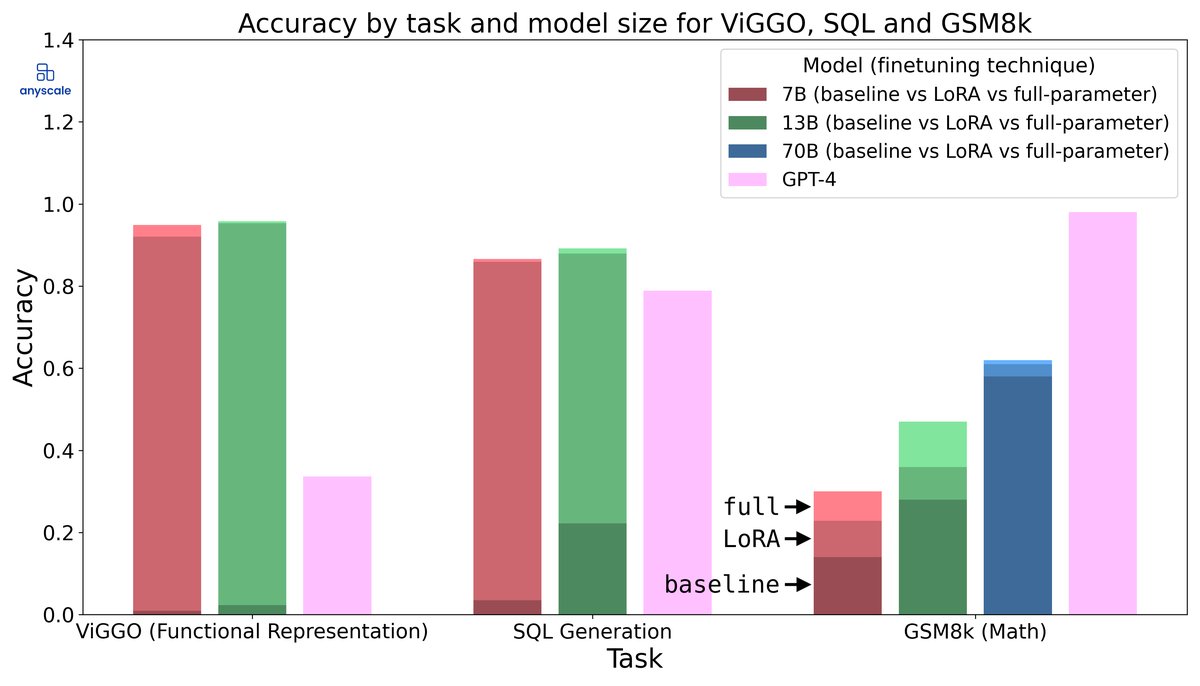

- Optimize Your Fine-Tuning Process: Techniques like quantization, LoRA (Low-Rank Adaptation), and other parameter-efficient fine-tuning methods can significantly reduce memory requirements and training time.

- Select the Right Cloud Provider: Specialized GPU cloud providers often offer significantly lower prices than traditional cloud providers like AWS, Azure, and GCP.

- Utilize Spot Instances/Interruptible Instances: These offer substantial discounts but come with the risk of interruption. For fine-tuning, regular checkpointing mitigates this risk, since an interrupted run can resume from the last saved checkpoint instead of starting over.

- Monitor and Optimize Resource Usage: Continuously monitor GPU utilization, memory usage, and network bandwidth to identify and eliminate bottlenecks.
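
To make the first step concrete: what matters is the cost of a finished job, not the hourly rate. The sketch below uses the approximate RTX 3090 and A100 hourly rates quoted later in this guide. The job durations are hypothetical numbers chosen for illustration, not benchmarks.

```python
def job_cost(hourly_rate_usd, hours):
    """Total cost of one fine-tuning run."""
    return hourly_rate_usd * hours

# Hourly rates are the approximate figures from this guide.
# Durations are HYPOTHETICAL: assume the A100 finishes the same job 8x faster.
rtx_3090 = job_cost(hourly_rate_usd=0.50, hours=40.0)
a100 = job_cost(hourly_rate_usd=3.00, hours=5.0)

print(f"RTX 3090: ${rtx_3090:.2f}")
print(f"A100:     ${a100:.2f}")

# Speedup at which both GPUs cost the same per job.
break_even_speedup = 3.00 / 0.50
print(f"The A100 is cheaper per job only if it is more than "
      f"{break_even_speedup:.0f}x faster")
```

Under these assumptions the pricier GPU wins, but only because the assumed speedup exceeds the price ratio. Measure throughput on a short trial run before committing.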

GPU Recommendations for LLM Fine-Tuning

High-End GPUs (For Large Models and Complex Tasks)

- NVIDIA A100: A workhorse for LLM training and fine-tuning. Offers excellent performance and memory capacity (40GB or 80GB).

- NVIDIA H100: The generation after the A100 (Hopper architecture), offering higher performance than the A100 at a higher hourly price.

Mid-Range GPUs (For Smaller Models and Moderate Tasks)

- NVIDIA RTX 3090: A powerful consumer-grade GPU with 24GB of VRAM, making it suitable for fine-tuning smaller LLMs or using LoRA on larger models.

- NVIDIA RTX 4090: More powerful than the 3090, with the same 24GB of VRAM, and often a better price-performance ratio.

- NVIDIA A40: Offers performance similar to the RTX 3090, but with 48GB of VRAM and a more robust server-grade design.

Budget-Friendly GPUs (For Experimentation and Small-Scale Fine-Tuning)

- NVIDIA RTX 3060: A good entry-level option with 12GB of VRAM, suitable for experimenting with smaller models or using techniques like quantization.
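
A rough memory estimate helps match a model to the VRAM figures listed above. The sketch below is a back-of-the-envelope calculation, not a precise profiler. It assumes a hypothetical 7B-parameter model and uses a common rule of thumb of about 16 bytes per parameter for full fine-tuning with Adam in mixed precision. Activation memory is deliberately left out, so real usage will be higher.

```python
GB = 1e9  # decimal gigabytes, for simplicity

def weights_gb(n_params, bits_per_param):
    """Memory for the model weights alone."""
    return n_params * bits_per_param / 8 / GB

def full_finetune_gb(n_params, bytes_per_param=16):
    """Rule-of-thumb estimate for full fine-tuning with Adam in mixed precision:
    2 (fp16 weights) + 2 (fp16 gradients) + 12 (fp32 master weights and two
    Adam moments) bytes per parameter. Activations are NOT included."""
    return n_params * bytes_per_param / GB

n = 7e9  # hypothetical 7B-parameter model

fp16_weights = weights_gb(n, 16)
int4_weights = weights_gb(n, 4)
full_ft = full_finetune_gb(n)

print(f"fp16 weights:     {fp16_weights:.1f} GB")
print(f"4-bit weights:    {int4_weights:.1f} GB")
print(f"full fine-tuning: {full_ft:.0f} GB (before activations)")
```

On these numbers, the half-precision weights alone fit on a 24GB card, while the full fine-tuning state exceeds a single 80GB A100. That gap is why the parameter-efficient and quantization techniques in this guide matter for smaller GPUs.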

Cost Optimization Techniques

Parameter-Efficient Fine-Tuning (PEFT)

PEFT techniques, such as LoRA, adapt a pre-trained LLM to a specific task by training only a small number of parameters. This significantly reduces memory requirements and training time.
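
To see why LoRA trains so few parameters, it helps to count them. LoRA freezes a weight matrix of shape d_out x d_in and learns two small matrices of rank r in its place, adding r * (d_out + d_in) trainable parameters. The hidden size and rank below are hypothetical values picked for illustration.

```python
def lora_params(d_out, d_in, rank):
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix:
    B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)

d = 4096   # hypothetical hidden size
rank = 8   # hypothetical LoRA rank

full = d * d                      # parameters in the frozen matrix
lora = lora_params(d, d, rank)    # parameters actually trained

print(f"full matrix: {full:,} parameters")
print(f"LoRA update: {lora:,} parameters ({100 * lora / full:.2f}% of full)")
```

Gradients and optimizer state are only needed for the trained parameters, which is where most of the memory saving comes from.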

Quantization

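Quantization reduces the precision of the model's weights, which shrinks the memory footprint and, on hardware and kernels that support low-precision arithmetic, can also speed up computation. Techniques like 8-bit or 4-bit quantization often have only a small impact on model quality, though the effect should be checked on your own task.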
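
The idea can be illustrated with a minimal sketch of symmetric "absmax" 8-bit quantization on a handful of toy weights. This is one simple scheme chosen for illustration; production libraries use more elaborate variants (per-block scales, 4-bit formats), and the values below are made up.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127].
    Assumes at least one weight is non-zero."""
    scale = max(abs(w) for w in weights) / 127.0
    return [round(w / scale) for w in weights], scale

def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]

weights = [0.824, -1.27, 0.031, 0.507, -0.648]  # toy values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("max round-trip error:", max_error)
```

Each weight now needs one byte instead of four (float32) or two (float16), and the rounding error per weight is bounded by half the scale.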

Mixed Precision Training

Using mixed precision training (e.g., using bfloat16 or float16) can significantly speed up training and reduce memory usage compared to full precision (float32).
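
The trade-off behind reduced precision can be seen with nothing more than Python's standard `struct` module, which supports IEEE 754 half precision (format code `e`). The sketch below only illustrates storage size and rounding; it is not a training loop, and `struct` has no bfloat16 format, so only float16 is shown.

```python
import struct

def round_trip(value, fmt):
    """Store a Python float in the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

FP32, FP16 = "<f", "<e"  # IEEE 754 single and half precision

bytes_fp32 = struct.calcsize(FP32)
bytes_fp16 = struct.calcsize(FP16)
print(f"bytes per value: fp32={bytes_fp32}, fp16={bytes_fp16}")

weight = 0.1
print("0.1 stored as fp16:", round_trip(weight, FP16))

tiny_grad = 1e-8  # a very small gradient value
print("1e-8 stored as fp32:", round_trip(tiny_grad, FP32))
print("1e-8 stored as fp16:", round_trip(tiny_grad, FP16))
```

Half precision halves the bytes per value, but very small values underflow to zero in float16. This is one reason float16 training is usually paired with loss scaling, and why bfloat16, which keeps float32's exponent range, is often preferred on GPUs that support it.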

Data Optimization

Ensure your dataset is efficiently loaded and processed. Use optimized data loaders and consider techniques like data sharding to distribute the data across multiple GPUs.
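
As a minimal sketch of data sharding, each worker can take a strided slice of the sample indices so that no sample is processed twice. The function name and sizes are hypothetical; in practice a framework utility (for example PyTorch's `DistributedSampler`) usually handles this, along with shuffling and padding.

```python
def shard_indices(num_samples, rank, world_size):
    """Indices handled by worker `rank` out of `world_size` workers (strided split)."""
    return list(range(rank, num_samples, world_size))

num_samples, world_size = 10, 4  # toy sizes
shards = [shard_indices(num_samples, r, world_size) for r in range(world_size)]

for r, shard in enumerate(shards):
    print(f"GPU {r}: {shard}")
```

Note that the shards differ in length when the dataset size is not a multiple of the worker count, which is why real samplers typically pad or drop the remainder.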

Gradient Accumulation

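If you have limited GPU memory, use gradient accumulation to simulate larger batch sizes: gradients from several small micro-batches are summed before each optimizer step. This can improve training stability without increasing peak memory, at the cost of more forward and backward passes per update.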
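
The sketch below checks the idea on a toy one-parameter model y = w * x with mean squared error: accumulating scaled gradients over four micro-batches of two samples gives the same gradient as one batch of eight. The data and the starting weight are made up for illustration.

```python
import math

def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0, 4.1, 5.9, 8.2, 9.8, 12.1, 14.0, 15.9]
w = 1.5
micro_batch = 2
accum_steps = len(xs) // micro_batch

# Gradient computed on the full batch of 8 samples at once.
full_grad = grad_mse(w, xs, ys)

# Same gradient, built up from 4 micro-batches of 2 samples each.
accumulated = 0.0
for step in range(accum_steps):
    lo = step * micro_batch
    hi = lo + micro_batch
    # Divide by accum_steps so the sum matches the full-batch mean.
    accumulated += grad_mse(w, xs[lo:hi], ys[lo:hi]) / accum_steps

print("full batch: ", full_grad)
print("accumulated:", accumulated)
print("match:", math.isclose(full_grad, accumulated))
```

Only one micro-batch needs to be in memory at a time, which is the whole point: the effective batch size grows while peak activation memory stays at the micro-batch level.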

Cloud Provider Recommendations

RunPod

RunPod offers a wide range of GPUs at competitive prices. It specializes in on-demand GPU instances and also lets you rent directly from community members, which often results in lower prices. Both on-demand and spot instances are available.

Pricing (Example): RTX 3090 from ~$0.50/hour, A100 from ~$3/hour

Vast.ai

Vast.ai is another excellent option for finding affordable GPU instances. It aggregates GPU resources from various providers and offers spot instances at highly competitive prices. Its marketplace-style price discovery can lead to extremely low prices.

Pricing (Example): RTX 3090 from ~$0.30/hour, A100 from ~$2.50/hour (spot prices fluctuate)

Lambda Labs

Lambda Labs provides dedicated GPU servers and cloud instances, focusing on deep learning workloads. It offers pre-configured environments and excellent support for machine learning frameworks. It is more expensive than RunPod or Vast.ai but offers managed solutions.

Pricing (Example): A100 from ~$4/hour (dedicated instance)

Vultr

Vultr offers a more traditional cloud experience but has started offering GPU instances. Their pricing can be competitive, especially for longer-term commitments. A good option if you prefer a more established cloud provider.

Pricing (Example): A100 from ~$3.50/hour

Comparison Table

| Provider | A100 Price (Approx.) | Spot Instances | Ease of Use | Best For |
|---|---|---|---|---|
| RunPod | $3/hour | Yes | Moderate | Cost-conscious users, community rentals |
| Vast.ai | $2.50/hour (spot) | Yes (Spot only) | Moderate (Requires some technical knowledge) | Lowest prices, flexible configurations |
| Lambda Labs | $4/hour | No | Easy (Managed solutions) | Managed environments, dedicated servers |
| Vultr | $3.50/hour | No | Easy (Traditional Cloud) | Familiar cloud environment, longer-term commitments |
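
The table's approximate A100 rates can be turned into a per-job comparison. The sketch below assumes a hypothetical 10-hour job and, for the spot-priced option, a hypothetical 10% of work redone after interruptions. Both figures are placeholders to replace with your own measurements.

```python
# Approximate A100 hourly rates from the table above (USD).
a100_rates = {
    "RunPod": 3.00,
    "Vast.ai (spot)": 2.50,
    "Lambda Labs": 4.00,
    "Vultr": 3.50,
}

def effective_cost(rate, hours, rework_fraction=0.0):
    """Job cost, inflated by the fraction of work redone after interruptions."""
    return rate * hours * (1.0 + rework_fraction)

hours = 10.0  # HYPOTHETICAL job length
costs = {
    name: effective_cost(rate, hours,
                         rework_fraction=0.10 if "spot" in name else 0.0)
    for name, rate in a100_rates.items()
}

for name, cost in sorted(costs.items(), key=lambda kv: kv[1]):
    print(f"{name:15s} ${cost:.2f}")
```

Under these assumptions the spot option stays cheapest even after paying for redone work, but the ranking changes if interruptions are frequent or checkpoints are sparse.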

Common Pitfalls to Avoid

- Underestimating the required GPU memory: Carefully estimate the memory requirements of your model and dataset before selecting a GPU.

- Ignoring data transfer costs: Transferring large datasets can be expensive. Consider storing your data close to the GPU instance.

- Not using spot instances: Spot instances can save you a lot of money, but be prepared for interruptions. Implement checkpointing to mitigate this risk.

- Failing to monitor resource usage: Continuously monitor GPU utilization, memory usage, and network bandwidth to identify and eliminate bottlenecks.

- Overlooking software setup: Ensure your environment is properly configured with the necessary drivers, libraries, and frameworks. Use pre-built Docker images when available.
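
The checkpointing advice for spot instances can be sketched with the standard library alone. This is a toy loop under stated assumptions: the "training step" just increments a counter, the state is a small JSON file, and the `stop_at` argument is a hypothetical stand-in for the instance being reclaimed. A real run would save model and optimizer state using its framework's own save and load functions.

```python
import json
import os
import tempfile

def save_checkpoint(path, state):
    """Write atomically so an interruption never leaves a half-written file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on the same filesystem

def load_checkpoint(path):
    """Return the saved state, or a fresh one if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0}

def train(path, total_steps, save_every, stop_at=None):
    """Toy loop; `stop_at` simulates the instance being reclaimed at that step."""
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if stop_at is not None and state["step"] == stop_at:
            return state["step"]  # simulated interruption, nothing saved here
        state["step"] += 1  # stand-in for one real training step
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    return state["step"]

ckpt = os.path.join(tempfile.mkdtemp(), "checkpoint.json")

reached = train(ckpt, total_steps=100, save_every=10, stop_at=37)
resumed_from = load_checkpoint(ckpt)["step"]
final = train(ckpt, total_steps=100, save_every=10)

print(f"interrupted at step {reached}, resumed from step {resumed_from}, "
      f"finished at step {final}")
```

The work lost to an interruption is bounded by the checkpoint interval, so the interval is a trade-off between redone steps and time spent writing checkpoints.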

Real Use Cases

Stable Diffusion Fine-Tuning

Fine-tuning Stable Diffusion for specific styles or subjects can be done affordably using RTX 3090 or RTX 4090 GPUs on RunPod or Vast.ai. LoRA is a popular technique to reduce memory requirements.

LLM Inference

While this guide focuses on fine-tuning, the same principles apply to deploying LLMs for inference. Using quantized models and efficient inference engines can significantly reduce costs.

Model Training

Training LLMs from scratch is generally far more expensive than fine-tuning, but the same cost optimization techniques apply. Using multiple GPUs in parallel shortens wall-clock training time, although it does not by itself reduce the total GPU-hours billed.