How to Set Up a Reverse Proxy on a VPS?

In this detailed article, we draw on our experience as system administrators to show you how to set up a reverse proxy on a virtual private server (VPS). A reverse proxy is a powerful tool that can improve the security, performance, and manageability of your web applications. We will walk through installing and configuring a reverse proxy with Nginx, one of the most popular and reliable web servers and a common choice for this role. You will learn how to route traffic to back-end servers, balance load between them, configure SSL/TLS, and monitor and troubleshoot the setup. This article is useful both for beginners and for experienced users looking to optimize their web servers. Let’s get started!

Table of Contents:

- What is a reverse proxy and why do you need it?

- Choosing a Tool: Nginx

- Installing and Configuring Nginx

- Reverse Proxy Configuration

- Security: SSL/TLS

- Monitoring and Troubleshooting

What is a reverse proxy and why do you need it?

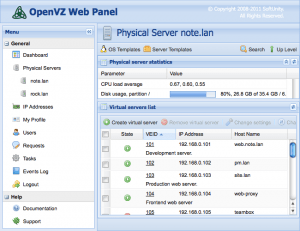

A reverse proxy is a server that acts as an intermediary between clients and one or more back-end servers. The client sends a request to the reverse proxy, which then forwards the request to the appropriate back-end server. The back-end server’s response is returned to the reverse proxy, which then sends it to the client. From the client’s point of view, it only interacts with the reverse proxy, without knowing about the existence of the back-end servers. This provides several important advantages.

- Security: A reverse proxy hides the structure of your internal network and does not reveal the IP addresses of the back-end servers, which makes it harder for attackers to learn about your infrastructure. It can also act as a filter, blocking malicious requests and helping to mitigate DDoS attacks against the back-end servers, for example by limiting the request rate per client.

- Load balancing: A reverse proxy can distribute incoming traffic between multiple back-end servers, preventing one server from being overloaded and ensuring high availability of your application.

- Caching: A reverse proxy can cache static content, such as images, CSS, and JavaScript files, which reduces the load on the back-end servers and speeds up page loading for users.

- SSL/TLS encryption: A reverse proxy can handle SSL/TLS encryption, freeing back-end servers from this resource-intensive task. This also allows you to centrally manage SSL/TLS certificates.

- Simplified management: A reverse proxy simplifies the management of web applications, allowing you to change the configuration of the back-end servers without having to make changes on the client side.

Imagine you have an online store with several servers that handle user requests, process payments, and manage the database. Without a reverse proxy, each user connects directly to one of these servers; if that server is overloaded, the site slows down or becomes unavailable. With a reverse proxy, all users connect to the reverse proxy server, which distributes requests among the available servers. If one server fails, the reverse proxy sends requests to the remaining servers, keeping the site running.

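Example command to check server availability:

ping -c 4 192.168.1.10

This command sends four ICMP echo requests to 192.168.1.10 and reports whether replies arrive and how long they take. Some hosts and firewalls block ICMP, so a failed ping does not always mean the server is down.

Example command to check listening ports:

sudo netstat -tulnp

This command lists the TCP (-t) and UDP (-u) sockets that are listening for connections (-l), shows addresses and ports as numbers (-n), and shows the PID and name of the process that owns each socket (-p; root privileges are needed to see processes owned by other users). This is useful for determining which ports are open and which processes are using them. On distributions where the net-tools package is not installed, ss -tulnp provides the same information.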

Example command to check routes:

route -n

This command displays the kernel IP routing table with numeric addresses. This is useful for determining how traffic is routed from your server to other networks. On systems without net-tools, ip route shows the same information.
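The listening-port check can also be scripted, for example to confirm that Nginx has opened ports 80 and 443 after a configuration change. The following is a minimal sketch under stated assumptions: it reads the output of ss from the iproute2 package rather than netstat (the -H flag suppresses the header line), and port_is_listening is a hypothetical helper name, not a standard command.

```shell
# Hypothetical helper: succeed (exit 0) if the given TCP port appears as a
# local listening port in `ss -Htln` output read from standard input.
# Assumed input columns: State Recv-Q Send-Q Local-Address:Port Peer-Address:Port
port_is_listening() {
  awk -v port="$1" '
    {
      addr = $4              # local address and port, e.g. 0.0.0.0:80 or [::]:443
      sub(/.*:/, "", addr)   # keep only the text after the last colon
      if (addr == port) found = 1
    }
    END { exit !found }'     # exit 0 if found, 1 otherwise
}
```

Usage: ss -Htln | port_is_listening 443 && echo "Nginx is listening on port 443"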

A reverse proxy is a key element of modern web infrastructure, allowing you to improve the security and performance of web applications.

John Doe, Senior System Administrator

Example: Suppose you have two web servers: webserver1.example.com (192.168.1.10) and webserver2.example.com (192.168.1.11). Your reverse proxy has the external IP address 203.0.113.10. When a user opens www.example.com, the request first arrives at the reverse proxy (203.0.113.10), which forwards it to one of the web servers (for example, webserver1.example.com). The user receives the response generated by webserver1.example.com, but from their point of view, they are communicating directly with www.example.com.

Choosing a Tool: Nginx

Nginx is a high-performance web server and reverse proxy known for its efficiency, stability, and flexibility. It can handle thousands of simultaneous connections and consumes relatively few resources. Nginx is an excellent choice for use as a reverse proxy for several reasons:

- High performance: Nginx is designed to handle a large number of concurrent connections with minimal latency.

- Flexibility: Nginx offers a wide range of settings and modules, allowing you to adapt it to various tasks.

- Ease of configuration: Nginx has a clear and logical configuration syntax.

- SSL/TLS support: Nginx provides excellent support for SSL/TLS encryption, allowing you to provide a secure connection between the client and the server.

- Caching: Nginx can cache static and dynamic content, which significantly improves the performance of web applications.

There are other alternatives, such as Apache, but Nginx often outperforms Apache, especially in scenarios with a large number of concurrent connections. In addition, Nginx configuration is often considered simpler and more understandable than Apache configuration.

| Feature | Nginx | Apache |
|---|---|---|
| Performance (concurrent connections) | High | Average |
| Resource usage | Low | High |
| Configuration | Relatively simple | More complex |
| SSL/TLS support | Excellent | Good |
| Caching | Excellent | Good (with modules) |

In this guide, we will use Nginx as a reverse proxy. Before you begin, make sure you have a VPS with a Linux operating system installed (such as Ubuntu or Debian) and access to the command line.

Example command to check the Nginx version:

nginx -v

This command prints the installed Nginx version.

Example command to check the Nginx configuration syntax:

sudo nginx -t

This command checks the syntax of your Nginx configuration files and reports any errors. Always run it after making changes to the configuration.

Example command to restart Nginx:

sudo systemctl restart nginx

This command restarts Nginx so that configuration changes take effect. For configuration changes alone, sudo systemctl reload nginx is usually sufficient: it applies the new configuration without dropping active connections.
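Because a configuration error can stop Nginx from starting, it is a good habit to combine the syntax check with the reload. The following is a minimal sketch rather than a standard tool: safe_reload is a hypothetical function name, and it assumes it runs with root privileges on a systemd-based system.

```shell
# Hypothetical helper: test the Nginx configuration first and reload only
# if the test passes, so a typo does not take the running proxy down.
# Run it with root privileges (for example, from a root shell).
safe_reload() {
  if nginx -t; then
    # "reload" applies the new configuration without dropping
    # established client connections.
    systemctl reload nginx
  else
    echo "nginx -t failed; configuration was not reloaded" >&2
    return 1
  fi
}
```

Usage: save the function to a file, load it in a root shell with the . (dot) command, and run safe_reload after every configuration change.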

Nginx is not just a web server, it’s a powerful tool for building a high-performance and reliable web infrastructure.

Jane Smith, DevOps Engineer

Example: You are planning to deploy a new web application. You can use Nginx as a reverse proxy to distribute traffic between multiple instances of your application, ensuring high availability and scalability. When traffic grows, you can add new instances of the application to the list of back-end servers in the Nginx configuration and reload Nginx; it then starts distributing traffic to them as well.

Installing and Configuring Nginx

The process of installing Nginx depends on your operating system. Below are instructions for Ubuntu/Debian and CentOS/RHEL.

Installing Nginx on Ubuntu/Debian:

First, update the package list:

sudo apt update

Then install Nginx:

sudo apt install nginx

After installation, start Nginx and configure it to start automatically when the system boots:

sudo systemctl start nginx

sudo systemctl enable nginx

Installing Nginx on CentOS/RHEL:

First, install the EPEL repository (if it is not already installed):

sudo yum install epel-release

Then install Nginx:

sudo yum install nginx

After installation, start Nginx and configure it to start automatically when the system boots:

sudo systemctl start nginx

sudo systemctl enable nginx

After installation, check the status of Nginx to make sure it is running:

sudo systemctl status nginx

Expected output (details such as paths, PIDs, and dates vary by distribution and system):

● nginx.service - The Nginx HTTP and reverse proxy server
Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)
Active: active (running) since Fri 2023-10-27 10:00:00 UTC; 10s ago
Docs: man:nginx(8)
Main PID: 1234 (nginx)
Tasks: 2 (limit: 4915)
CGroup: /system.slice/nginx.service
└─1234 nginx: master process /usr/sbin/nginx -g daemon on; master_process on;

Oct 27 10:00:00 your-vps systemd[1]: Started The Nginx HTTP and reverse proxy server.

If Nginx is running, you should see "active (running)" in the output of the command.

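The main Nginx configuration file is usually /etc/nginx/nginx.conf. On Ubuntu/Debian, sites (virtual hosts) are stored in the directory /etc/nginx/sites-available/, and symbolic links to active sites are created in /etc/nginx/sites-enabled/. The CentOS/RHEL packages do not use these directories; there, site configuration files go in /etc/nginx/conf.d/ and must have a .conf extension.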

Warning: Before making changes to the Nginx configuration file, make a backup copy of it. This will allow you to easily restore the configuration in case of problems.
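A backup can be as simple as copying the file. The sketch below uses a hypothetical helper, backup_config, which copies a file to a timestamped .bak name next to the original and prints the new path. Keep such backups out of directories that Nginx includes with a * wildcard, such as /etc/nginx/sites-enabled/, because Nginx would load the copy as an additional configuration file.

```shell
# Hypothetical helper: copy a configuration file to a timestamped backup
# next to the original and print the backup's path.
backup_config() {
  src="$1"
  dest="${src}.bak.$(date +%Y%m%d-%H%M%S)"
  # -p keeps the mode, timestamps and (when run as root) ownership
  cp -p -- "$src" "$dest" || return 1
  printf '%s\n' "$dest"
}
```

For example, in a root shell: backup_config /etc/nginx/nginx.conf. To back up the whole configuration directory instead, sudo cp -a /etc/nginx /etc/nginx.bak copies it recursively, preserving permissions.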

Example: You have installed Nginx on your VPS. Now you need to configure it to serve a static site. You can create a new configuration file in /etc/nginx/sites-available/mysite and add the configuration for your site to it. Then you can create a symbolic link to this file in /etc/nginx/sites-enabled/ and restart Nginx to apply the changes.

Reverse Proxy Configuration

To configure Nginx as a reverse proxy, you need to create or modify the site configuration file. For example, you can create a new configuration file /etc/nginx/sites-available/example.com and add the following configuration to it:

server {
    listen 80;
    server_name example.com www.example.com;

    location / {
        proxy_pass http://192.168.1.100:8080;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

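In this example:

- listen 80; specifies that Nginx will listen on port 80 (HTTP).
- server_name example.com www.example.com; specifies the domain names that this server block handles.
- proxy_pass http://192.168.1.100:8080; specifies the address of the back-end server to which requests are forwarded: in this case, a server with the IP address 192.168.1.100 listening on port 8080.
- The proxy_set_header directives pass information about the client’s request to the back-end server: Host passes the domain name the client requested (by default, Nginx would send the address from proxy_pass instead), X-Real-IP passes the client’s IP address, X-Forwarded-For appends it to the list of addresses the request has passed through, and X-Forwarded-Proto tells the back end whether the client used HTTP or HTTPS. Without these headers, the back-end application would see every request as coming from the proxy itself.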

After creating the configuration file, enable the site by creating a symbolic link to it in the directory /etc/nginx/sites-enabled/:

sudo ln -s /etc/nginx/sites-available/example.com /etc/nginx/sites-enabled/example.com

If the default site is enabled, disable it by removing its symbolic link (the original file stays in /etc/nginx/sites-available/):

sudo rm /etc/nginx/sites-enabled/default

Check the syntax of the Nginx configuration:

sudo nginx -t

Restart Nginx to apply the changes:

sudo systemctl restart nginx

Now, when a user visits example.com, Nginx forwards the request to the back-end server at 192.168.1.100:8080.
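On Ubuntu/Debian, enabling a site always follows the same pattern, so the linking step can be wrapped in a small function. This is a sketch with a hypothetical name, enable_site; its optional second argument, also an assumption of this example, points it at a directory other than /etc/nginx, which is convenient for testing.

```shell
# Hypothetical helper: enable a site on Debian/Ubuntu by linking its file
# from sites-available into sites-enabled.
# Usage: enable_site NAME [NGINX_DIR]   (NGINX_DIR defaults to /etc/nginx)
enable_site() {
  name="$1"
  base="${2:-/etc/nginx}"
  src="$base/sites-available/$name"
  link="$base/sites-enabled/$name"
  if [ ! -f "$src" ]; then
    echo "no such site file: $src" >&2
    return 1
  fi
  # -s creates a symbolic link; -f replaces an existing link of the same name
  ln -sf "$src" "$link"
}
```

For example, in a root shell: enable_site example.com && nginx -t && systemctl reload nginx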

Load balancing between multiple back-end servers:

To load balance between multiple back-end servers, you need to define a group of servers in the Nginx configuration file:

upstream backend {
    server 192.168.1.100:8080;
    server 192.168.1.101:8080;
}

server {
    listen 80;
    server_name example.com www.example.com;

    location / {
        proxy_pass http://backend;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

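In this example, Nginx distributes traffic between the servers 192.168.1.100:8080 and 192.168.1.101:8080. By default, Nginx uses the round-robin method, sending requests to the servers in turn. You can choose another method by adding a directive at the top of the upstream block, such as least_conn; (send each request to the server with the fewest active connections) or ip_hash; (choose the server based on the client’s IP address, so requests from the same client keep reaching the same server).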
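If you manage several upstream groups, a small generator keeps their layout consistent. The function below is a hypothetical sketch, not part of Nginx: it prints an upstream block for the three methods mentioned above (round-robin, least_conn, ip_hash). Nginx supports further methods and per-server parameters, such as weight, that this sketch leaves out.

```shell
# Hypothetical generator: print an Nginx upstream block.
# Usage: make_upstream NAME METHOD SERVER...
# METHOD is round_robin (the Nginx default, so no directive is written),
# least_conn or ip_hash.
make_upstream() {
  name="$1"
  method="$2"
  shift 2
  case "$method" in
    round_robin) directive="" ;;
    least_conn|ip_hash) directive="$method" ;;
    *) echo "unknown method: $method" >&2; return 1 ;;
  esac
  printf 'upstream %s {\n' "$name"
  if [ -n "$directive" ]; then
    printf '    %s;\n' "$directive"
  fi
  for server in "$@"; do
    printf '    server %s;\n' "$server"
  done
  printf '}\n'
}

# Example:
#   make_upstream backend least_conn 192.168.1.100:8080 192.168.1.101:8080
# prints:
#   upstream backend {
#       least_conn;
#       server 192.168.1.100:8080;
#       server 192.168.1.101:8080;
#   }
```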

Tip: Use tools to monitor the load on the back-end servers. This will allow you to determine which servers are overloaded and add new servers to the group if necessary.

For more details, see the official Nginx documentation on reverse proxying.

Example: You are developing a microservices architecture. You can use Nginx as a reverse proxy to route requests to different microservices depending on the URL. For example, requests to /api/users can be forwarded to the user management microservice, and requests to /api/products to the product management microservice.
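The routing described in this example can be expressed as one location block per URL prefix. The sketch below generates those blocks; the function name make_locations and the back-end addresses 192.168.1.120:3000 and 192.168.1.121:3000 are hypothetical. Because each proxy_pass contains no URI after the address, Nginx passes the original request URI (for example, /api/users/42) to the microservice unchanged.

```shell
# Hypothetical generator: print one Nginx location block per
# PREFIX=ADDRESS pair, so each API path is proxied to its own service.
make_locations() {
  for pair in "$@"; do
    case "$pair" in
      *=*) ;;
      *) echo "expected PREFIX=ADDRESS, got: $pair" >&2; return 1 ;;
    esac
    prefix="${pair%%=*}"   # text before the first "="
    target="${pair#*=}"    # text after the first "="
    printf 'location %s {\n' "$prefix"
    printf '    proxy_pass http://%s;\n' "$target"
    # Single quotes keep $host literal so that Nginx expands it.
    printf '    proxy_set_header Host $host;\n'
    printf '}\n'
  done
}
```

Usage: make_locations "/api/users/=192.168.1.120:3000" "/api/products/=192.168.1.121:3000". Paste the output inside the server block next to the existing location / block, and add the other proxy_set_header lines from the earlier examples if your services need them.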

Security: SSL/TLS

SSL/TLS encryption is necessary to ensure the security of the connection between the client and the reverse proxy. This protects data from interception and unauthorized access. The easiest way to get an SSL/TLS certificate is to use Let’s Encrypt, a free and automated certificate authority.

Installing Certbot (Let’s Encrypt):

On Ubuntu/Debian:

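sudo apt install certbot python3-certbot-nginx

On CentOS/RHEL (the packages are provided by the EPEL repository):

sudo yum install certbot python3-certbot-nginx

Getting an SSL/TLS certificate using Certbot:

Before running Certbot, make sure that example.com and www.example.com point to your server’s public IP address and that port 80 is reachable from the internet: Let’s Encrypt verifies that you control the domains by sending HTTP requests to them.

sudo certbot --nginx -d example.com -d www.example.com

This command obtains an SSL/TLS certificate for example.com and www.example.com and configures Nginx to use it.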

After successfully obtaining the certificate, Certbot will offer to redirect HTTP traffic to HTTPS. It is recommended to choose this option for security reasons.

Certbot updates your Nginx configuration to enable SSL/TLS and marks the lines it adds with a # managed by Certbot comment. The result is similar to the following configuration:

server {
    listen 80;
    server_name example.com www.example.com;
    return 301 https://$host$request_uri;
}

server {
    listen 443 ssl;
    server_name example.com www.example.com;

    ssl_certificate /etc/letsencrypt/live/example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem;
    include /etc/letsencrypt/options-ssl-nginx.conf;
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

    location / {
        proxy_pass http://192.168.1.100:8080;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

In this example:

- The first server block redirects HTTP traffic (port 80) to HTTPS (port 443).
- The second server block listens on port 443 (HTTPS) and uses the certificate in /etc/letsencrypt/live/example.com/fullchain.pem and its private key in /etc/letsencrypt/live/example.com/privkey.pem.
- include /etc/letsencrypt/options-ssl-nginx.conf; and ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem; load the SSL/TLS settings recommended by Certbot, such as the allowed protocol versions and ciphers.

Certbot sets up automatic renewal of SSL/TLS certificates. You can test the renewal process with the command:

sudo certbot renew --dry-run

This command simulates renewal against the Let’s Encrypt staging environment without replacing your current certificates. If everything is in order, the output ends with a success message such as "Congratulations, all simulated renewals succeeded".

Warning: Let’s Encrypt certificates are only valid for 90 days, so renewal must work reliably. Make sure the renewal job that Certbot installs (a systemd timer or a cron job, depending on how Certbot was installed) is active; on systems that use a timer, systemctl list-timers | grep certbot shows it.
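To see whether a certificate is close to expiry, openssl can check its end date. The sketch below wraps openssl x509 -checkend, which exits with status 0 if the certificate will not expire within the given number of seconds; cert_expires_within is a hypothetical helper name. The /etc/letsencrypt/live/ directory is normally readable only by root.

```shell
# Hypothetical helper: succeed (exit 0) if the first certificate in the
# given PEM file expires within the given number of days.
# Usage: cert_expires_within DAYS CERT_FILE
cert_expires_within() {
  days="$1"
  cert="$2"
  # -checkend exits 0 when the certificate does NOT expire in that window.
  if openssl x509 -checkend "$((days * 86400))" -noout -in "$cert" >/dev/null; then
    return 1   # still valid beyond the window
  fi
  return 0     # expires within the window (or the file could not be read)
}
```

For example, in a root shell: cert_expires_within 30 /etc/letsencrypt/live/example.com/fullchain.pem && echo "Certificate expires within 30 days". openssl reads the first certificate in fullchain.pem, which is the server’s own certificate.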

Example: You want to provide a secure connection for your online store. You use Certbot to get an SSL/TLS certificate for your domain. After installing the certificate, all traffic between users and your store will be encrypted, which will protect users’ personal data, such as passwords and credit card information.

Monitoring and Troubleshooting

Monitoring and troubleshooting are important to ensure the stable operation of your reverse proxy. Below are some useful commands and tools.

Checking Nginx status:

sudo systemctl status nginx

This command shows the current status of Nginx, including information about whether it is running, when it was started, and what processes it is using.

Viewing Nginx logs:

Nginx logs are stored in the /var/log/nginx/ directory. The most important logs are access.log (access log) and error.log (error log).

sudo tail -f /var/log/nginx/access.log

sudo tail -f /var/log/nginx/error.log

These commands show the latest entries in the access and error logs in real time. This is useful for tracking problems and errors.
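When the logs grow large, a quick count of status codes shows whether errors such as 502 (Bad Gateway) are piling up. The sketch below assumes the default combined log format, in which the status code is the ninth whitespace-separated field; status_counts is a hypothetical helper name, and malformed request lines containing extra spaces would shift the fields and be miscounted.

```shell
# Hypothetical helper: count responses per HTTP status code in an Nginx
# access log in the default "combined" format (status = 9th field).
# Reads the files given as arguments, or standard input if there are none.
status_counts() {
  awk '{ count[$9]++ } END { for (code in count) print code, count[code] }' "$@" | sort
}
```

Usage: sudo tail -n 1000 /var/log/nginx/access.log | status_counts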

Using journalctl:

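journalctl is a tool for viewing the systemd journal. You can use it to view messages related to the Nginx service:

sudo journalctl -u nginx.service

This command shows journal entries for the Nginx service, such as start and stop events and errors reported during startup. Request logs are still written to the files in /var/log/nginx/.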

Checking the availability of back-end servers:

You can use ping or curl to check the availability of back-end servers:

ping -c 4 192.168.1.100

curl http://192.168.1.100:8080

ping checks whether the host responds at the network level (if ICMP is not blocked). curl sends an HTTP request to the back-end application and shows its response, which confirms that the application itself, not just the host, is responding.
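To check several back-end servers at once, the curl test can be run in a loop. This is a minimal sketch; check_backends is a hypothetical name, and treating any HTTP response below 500 as "up" is a design choice of this example: it means the application answered, even if with an error such as 404.

```shell
# Hypothetical helper: print UP or DOWN for each back-end URL argument.
# Responses below 500 count as UP; 5xx, timeouts and refused connections
# count as DOWN.
check_backends() {
  for url in "$@"; do
    # -s: no progress output, -o /dev/null: discard the body,
    # -w: print only the status code, --max-time: give up after 5 seconds.
    # When the connection fails, curl prints 000 and exits non-zero.
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url") || code=000
    if [ "$code" -ge 100 ] && [ "$code" -lt 500 ]; then
      echo "UP $url ($code)"
    else
      echo "DOWN $url ($code)"
    fi
  done
}
```

Usage: check_backends http://192.168.1.100:8080 http://192.168.1.101:8080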

Checking Nginx configuration:

Always check the syntax of the Nginx configuration before restarting Nginx:

sudo nginx -t

If the configuration contains errors, Nginx reports them along with the file and line number where they occur.

Tip: Monitor the performance of your reverse proxy with tools such as Prometheus, which collects metrics, and Grafana, which visualizes them. This lets you track CPU load, memory usage, and traffic, and identify problems before they affect performance.
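Nginx can expose basic connection counters through its stub_status module, and monitoring tools can collect them (for Prometheus, NGINX’s nginx-prometheus-exporter reads this page). The sketch below assumes the module is available (nginx -V lists --with-http_stub_status_module when it is) and that you have added a location such as /nginx_status that contains the stub_status directive and is restricted with allow 127.0.0.1; and deny all;. The location path and the helper name parse_stub_status are hypothetical; the function turns the status page into name-value lines.

```shell
# Hypothetical helper: convert the plain-text stub_status page, read from
# standard input, into "name value" lines. The page looks like:
#   Active connections: 291
#   server accepts handled requests
#    16630948 16630948 31070465
#   Reading: 6 Writing: 179 Waiting: 106
parse_stub_status() {
  awk '
    /^Active connections:/ { print "active", $3 }
    /^ *[0-9]+ +[0-9]+ +[0-9]+/ {
      print "accepts", $1; print "handled", $2; print "requests", $3
    }
    /^Reading:/ { print "reading", $2; print "writing", $4; print "waiting", $6 }'
}
```

Usage: curl -s http://127.0.0.1/nginx_status | parse_stub_status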

Example: You noticed that your website is running slowly. You check the Nginx logs and see a lot of 502 (Bad Gateway) errors. This indicates that the back-end servers are not responding to requests. You check the availability of the back-end servers using ping and curl and find that one of the servers is unavailable. You restart this server, and the problem is resolved.

In conclusion, setting up a reverse proxy on a VPS using Nginx is a powerful way to improve the security, performance, and manageability of your web applications. By following this guide, you can successfully configure a reverse proxy and ensure stable and reliable operation of your web server.
