How to Optimize Virtual Machine Performance: Focus on the Disk Subsystem

Virtual machines (VMs) have become an integral part of modern IT infrastructure. However, it’s not enough to simply deploy a VM – it’s critical to ensure its optimal performance. This article focuses on optimizing the disk subsystem of virtual machines, as it often becomes a bottleneck affecting application speed. We will explore various methods and tools to maximize the efficient use of disk resources and improve the responsiveness of your VMs.


Choosing the Optimal Disk Type

- HDD (Hard Disk Drive): Suitable for storing large amounts of data where access speed is not critical, for example archives, backups, and lightly loaded file servers. Not recommended for databases or for virtual machines performing intensive read/write operations.

- SSD (Solid State Drive): Significantly faster than HDDs, providing low latency and high I/O throughput. Recommended for most virtual machines, databases, and web servers where high performance is required.

- NVMe (Non-Volatile Memory Express): The fastest type of drive, using the PCI Express interface to provide maximum bandwidth and minimal latency. Recommended for mission-critical applications that place heavy demands on the disk subsystem, such as high-load databases, real-time applications, and virtualization hosts with a large number of active VMs.
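Inside a Linux guest, a quick way to see which kind of drive the kernel believes it is using is the `rotational` flag in sysfs (1 for spinning disks, 0 for SSD/NVMe) together with the device name (`nvme*` devices are NVMe). The sketch below assumes a Linux system with sysfs mounted at `/sys`; `classify_disk` is a hypothetical helper name. Keep in mind that a hypervisor does not always pass the real media type through, so a virtual disk can report itself as rotational even when the datastore behind it is on SSD.

```sh
#!/bin/sh
# classify_disk NAME ROTATIONAL
# Hypothetical helper: maps a block device name and its sysfs
# "rotational" flag (1 = spinning, 0 = non-rotational) to a rough category.
classify_disk() {
    local name=$1 rotational=$2
    case $name in
        nvme*) echo "NVMe"; return 0 ;;
    esac
    if [ "$rotational" = "1" ]; then
        echo "HDD"
    else
        echo "SSD"
    fi
}

# Report every block device visible in sysfs, skipping loop and RAM devices.
for dev in /sys/block/*; do
    [ -r "$dev/queue/rotational" ] || continue
    devname=$(basename "$dev")
    case $devname in loop*|ram*|zram*) continue ;; esac
    echo "$devname: $(classify_disk "$devname" "$(cat "$dev/queue/rotational")")"
done
```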

- Thick Provisioning: When creating a virtual disk, all the reserved space is immediately allocated on the physical storage. Provides predictable performance, as there is no need to dynamically allocate space during VM operation.

- Thin Provisioning: The virtual disk occupies only the physical storage space that has actually been written. This saves disk space, but can reduce performance when the disk has to grow dynamically while the VM is running. Use it with caution and monitor free space on the physical storage, since thin disks can collectively grow beyond the capacity that is actually available.
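Thin and thick provisioning behave much like sparse and fully written files on an ordinary Linux file system, which makes the difference easy to observe without a hypervisor. This sketch is only an analogy, not a description of how any particular hypervisor stores its disks: it creates a sparse 64 MiB file with `truncate` (space is promised but not allocated, like a thin disk) and a 64 MiB file filled with zeros by `dd` (every block allocated upfront, like an eager-zeroed thick disk), then compares the space each one really uses. The file names are arbitrary.

```sh
#!/bin/sh
# Analogy only: a sparse file behaves like a thin disk, a fully written
# file like an eager-zeroed thick disk.
workdir=$(mktemp -d)

# "Thin": 64 MiB apparent size, but no data blocks are allocated yet.
truncate -s 64M "$workdir/thin.img"

# "Thick": 64 MiB of zeros written out, so every block is allocated now.
dd if=/dev/zero of="$workdir/thick.img" bs=1M count=64 2>/dev/null

# Space actually consumed on the underlying file system, in KiB.
thin_used=$(du -k "$workdir/thin.img" | cut -f1)
thick_used=$(du -k "$workdir/thick.img" | cut -f1)

ls -l "$workdir"   # both files report the same apparent size
echo "thin.img uses ${thin_used} KiB, thick.img uses ${thick_used} KiB"

rm -rf "$workdir"
```

The thin file starts at (almost) zero KiB and only grows as data is written, which is exactly the dynamic allocation that can cost performance and that makes monitoring free space necessary.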

To add a thick-provisioned disk to a virtual machine in vSphere:

- In the vSphere Web Client, navigate to the virtual machine.

- Click «Edit Settings».

- In the «Virtual Hardware» section, select «Add New Device» -> «Hard Disk».

- Specify the disk size.

- In the «Virtual Disk Provisioning» section, select «Thick Provision Eager Zeroed» or «Thick Provision Lazy Zeroed». «Eager Zeroed» allocates the space and zeroes the entire disk at creation time, which takes longer but avoids zeroing blocks on first write and therefore gives higher performance later. «Lazy Zeroed» allocates the space upfront but zeroes each block only when it is first written.

- Select a datastore located on SSD or NVMe storage for maximum performance.

- Save the changes.
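The same kind of disk can also be created from the ESXi shell with `vmkfstools`, whose `-c` option sets the size and `-d` option the disk format (`thin`, `zeroedthick` for lazy-zeroed, `eagerzeroedthick`). The helper below only prints the command so it can be reviewed before being run on a host; the function name `vmdk_create_cmd`, its provisioning keywords, and the datastore path in the example are hypothetical placeholders.

```sh
#!/bin/sh
# vmdk_create_cmd SIZE PROVISIONING PATH
# Hypothetical helper: prints (does not run) a vmkfstools command that
# creates a virtual disk with the requested provisioning type.
vmdk_create_cmd() {
    local size=$1 provisioning=$2 path=$3 format
    case $provisioning in
        thin)        format=thin ;;
        lazy-thick)  format=zeroedthick ;;
        eager-thick) format=eagerzeroedthick ;;
        *) echo "unknown provisioning type: $provisioning" >&2; return 1 ;;
    esac
    echo "vmkfstools -c $size -d $format $path"
}

# Example: preview the command for a 40 GB eager-zeroed disk on an SSD datastore.
vmdk_create_cmd 40G eager-thick /vmfs/volumes/ssd-datastore/app01/app01_1.vmdk
```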

To monitor disk performance:

- In the vSphere Web Client, navigate to the virtual machine.

- Go to the «Monitor» -> «Performance» tab.

- Select «Disk» from the «Chart Options» drop-down list.

- You can track metrics such as «Disk Latency», «Disk Read Rate», «Disk Write Rate» to assess the performance of the disk subsystem and identify potential problems. High latency usually indicates disk overload or an insufficiently fast disk type.
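Inside a Linux guest, a rough counterpart of the «Disk Latency» chart can be derived from `/proc/diskstats`, where, for each device, field 4 counts completed reads, field 7 the milliseconds spent reading, field 8 completed writes, and field 11 the milliseconds spent writing. The sketch divides total I/O time by total completed I/Os; these counters are cumulative since boot, so for current latency you would sample twice and use the differences (which is what `iostat -x` from the sysstat package does). The function name `avg_latency_ms` is hypothetical.

```sh
#!/bin/sh
# avg_latency_ms DEVICE [FILE]
# Hypothetical helper: average I/O latency in ms for DEVICE, computed
# from the cumulative counters in /proc/diskstats (since boot).
avg_latency_ms() {
    awk -v dev="$1" '
        $3 == dev {
            ios = $4 + $8     # completed reads + completed writes
            ms  = $7 + $11    # ms spent reading + ms spent writing
            if (ios > 0) printf "%.2f\n", ms / ios
            else print "0.00"
            found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "${2:-/proc/diskstats}"
}
```

For example, `avg_latency_ms sda` prints the average for `/dev/sda` and returns a non-zero status if no such device is listed.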

Host-Level Caching

- Reduced Latency: Caching data in RAM or SSD significantly reduces the time it takes to access data compared to traditional hard drives.

- Increased Throughput: Caching allows you to handle more read and write requests, increasing the overall throughput of the disk subsystem.

- Reduced Load on Disks: Caching reduces the number of read and write operations on physical disks, which extends their lifespan and reduces the likelihood of bottlenecks.
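The latency benefit can be estimated with the usual effective-access-time formula, where $h$ is the cache hit rate, $t_c$ the access time of the cache, and $t_d$ the access time of the backing disk. The figures in the worked example (a 90% hit rate, about 0.1 ms for an SSD cache, 8 ms for an HDD) are illustrative assumptions, not measurements:

$$
t_{\mathrm{eff}} = h \, t_c + (1 - h) \, t_d
$$

$$
t_{\mathrm{eff}} = 0.9 \times 0.1\ \mathrm{ms} + 0.1 \times 8\ \mathrm{ms} = 0.09\ \mathrm{ms} + 0.8\ \mathrm{ms} = 0.89\ \mathrm{ms}
$$

Against 8 ms without a cache, that is roughly a ninefold improvement in this example, and nearly all of the remaining latency comes from the 10% of requests that miss the cache.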

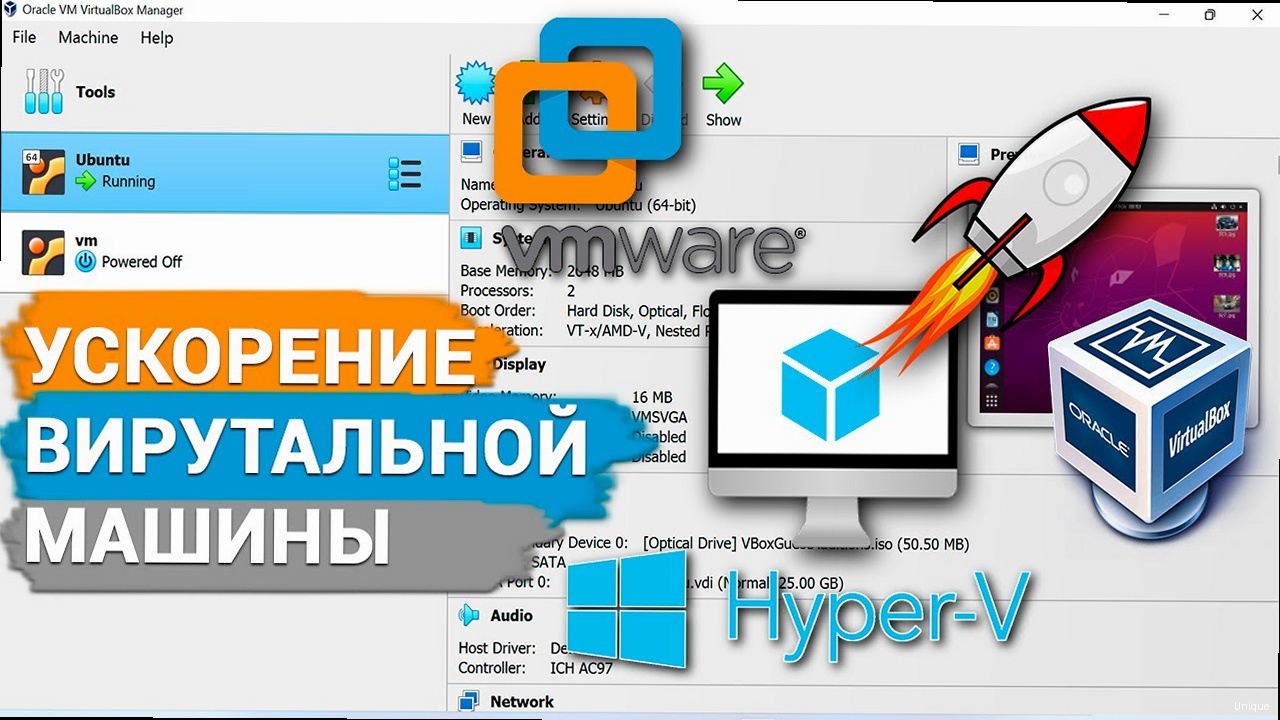

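- VMware vSphere Flash Read Cache (vFRC): Uses the host server’s SSDs to cache the read operations of virtual machines. Note that vFRC was deprecated in vSphere 6.7 and removed in vSphere 7.0, so it is only available on older hosts.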

- Microsoft Hyper-V Cache: Allows you to use the host server’s RAM to cache disk operations of virtual machines.

- Linux Page Cache: The Linux kernel automatically uses otherwise free RAM to cache data read from and written to disk.
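On Linux, the amount of RAM currently used as page cache is reported by the `Cached:` line of `/proc/meminfo` (and summarized in the «buff/cache» column of `free`). A minimal sketch that reads it; the function name `page_cache_mib` is hypothetical:

```sh
#!/bin/sh
# page_cache_mib [FILE]
# Hypothetical helper: prints the page cache size in MiB, taken from
# the "Cached:" line of /proc/meminfo (which reports it in kB).
# The exact field match skips the separate "SwapCached:" line.
page_cache_mib() {
    awk '$1 == "Cached:" { printf "%d\n", $2 / 1024; found = 1 }
         END { exit (found ? 0 : 1) }' "${1:-/proc/meminfo}"
}
```

Running `page_cache_mib` without arguments reads the live `/proc/meminfo`.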

To enable vFRC for a virtual disk:

- In the vSphere Web Client, navigate to the virtual machine.

- Click «Edit Settings».

- In the «Virtual Hardware» section, select the virtual disk for which you want to enable vFRC.

- Expand the «Disk Cache Configuration» section.

- Check the «Virtual Flash Read Cache» box.

- Specify the cache size (in MB or GB). The appropriate size depends on the flash (SSD) capacity available in the host's virtual flash resource and on the performance requirements of the virtual machine. Allocate enough to hold the data the VM accesses most frequently.

- Save the changes.

To check how effective the cache is:

- In the vSphere Web Client, navigate to the virtual machine.

- Go to the «Monitor» -> «Performance» tab.

- Select «Disk» from the «Chart Options» drop-down list.

- Track metrics such as «vFlash Read Cache Hit Rate» and «vFlash Read Cache Read Latency» to assess the effectiveness of caching. A high cache hit rate and low read latency indicate that caching is working effectively.
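The hit rate is simply cache hits divided by all cache lookups. If you have raw hit and miss counters, the percentage can be computed as below; the function name `hit_rate_pct` and the idea of feeding it raw counters are assumptions for illustration:

```sh
#!/bin/sh
# hit_rate_pct HITS MISSES
# Hypothetical helper: prints the cache hit rate as a percentage
# with one decimal place.
hit_rate_pct() {
    awk -v h="$1" -v m="$2" 'BEGIN {
        total = h + m
        if (total == 0) { print "0.0"; exit }   # no lookups yet
        printf "%.1f\n", 100 * h / total
    }'
}
```

For instance, 900 hits and 100 misses give a 90% hit rate, the kind of value at which the cache absorbs most of the read load.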

To reset the vFRC cache of a virtual machine from the ESXi shell:

`esxcli storage vflash cache reset -v <vm_name>`

Tuning the Guest OS Disk System

Tuning the disk system of the guest operating system (OS) is an important step in optimizing the performance of a virtual machine. Properly configuring the disk subsystem parameters within the guest OS can significantly improve read and write speeds and reduce latency. This process includes file system optimization, disk parameter configuration, and the use of special tools to improve performance.

Key Aspects of Tuning the Guest OS Disk System:

- Partition Alignment: Proper partition alignment ensures that each read or write issued by the guest maps onto whole physical disk blocks instead of straddling the boundary between two of them, which would force the storage to access both blocks. Incorrect alignment can lead to a significant decrease in performance, especially on SSD disks.

- File System Selection: Different file systems have different characteristics and suit different types of tasks. For example, XFS and ext4 are popular choices on Linux, while NTFS is the standard on Windows. Choosing the right file system can significantly affect the performance of the disk subsystem.

- File System Parameter Configuration: File systems have many parameters that can be configured to optimize performance. For example, you can change the block size, enable or disable journaling, and configure caching parameters.

- Disk Defragmentation (only for file systems that need it, such as NTFS): Defragmentation rearranges files so that each one occupies contiguous blocks on the disk. This reduces file access time and improves overall disk subsystem performance, mainly on HDD-backed storage, since SSDs have no seek penalty.

To check and fix partition alignment on Linux:

- Use the `fdisk -l` or `parted` command to view information about disk partitions and make sure they are aligned to the boundaries of physical disk blocks. For example:

  `fdisk -l /dev/sda`

- If the partitions are not aligned, you can use `parted` to recreate them with the correct alignment. Warning: recreating partitions destroys the data on them, so make a backup first.
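A partition is aligned when its starting offset in bytes is a multiple of the physical block size. A 1 MiB boundary, which current `fdisk` and `parted` use by default, is a multiple of every power-of-two block size up to 1 MiB, including 4 KiB. The sketch below checks a start sector as printed by `fdisk -l`, which reports positions in logical sectors (usually 512 bytes; see its «Units» line). The function name `is_aligned` is hypothetical; `parted /dev/sda align-check optimal 1` performs a similar check for partition 1 directly.

```sh
#!/bin/sh
# is_aligned START_SECTOR [SECTOR_BYTES] [ALIGN_BYTES]
# Hypothetical helper: succeeds if the partition start offset
# (START_SECTOR * SECTOR_BYTES) is a multiple of ALIGN_BYTES (default 1 MiB).
is_aligned() {
    local start=$1 sector=${2:-512} align=${3:-1048576}
    [ $(( start * sector % align )) -eq 0 ]
}

# Example: the classic misaligned MBR start (sector 63) versus the
# modern default (sector 2048).
for s in 63 2048; do
    if is_aligned "$s"; then
        echo "start sector $s: aligned"
    else
        echo "start sector $s: NOT aligned"
    fi
done
```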

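To tune ext4 mount options:

- When mounting the ext4 file system, you can use various options to optimize performance. For example, the `noatime` option disables updating the file access time on every read, which reduces the number of write operations to the disk. Modern kernels already default to `relatime`, which updates access times far less often, so the gain from `noatime` is smaller than it used to be.

- Edit the `/etc/fstab` file and add the mount options. For example:

  `/dev/sda1 /mnt/data ext4 defaults,noatime 0 0`

- After editing `/etc/fstab`, run `mount -a` to mount any file systems that are not mounted yet. `mount -a` skips file systems that are already mounted, so to apply the new options to one of those, remount it, for example with `mount -o remount /mnt/data`.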
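To confirm that an option is actually in effect, check the live mount table rather than `/etc/fstab`. The sketch reads `/proc/mounts` (device, mount point, type, comma-separated options, and so on); the function name `has_mount_option` is hypothetical, and the `/mnt/data` mount point simply follows the example above. Interactively, `findmnt -no OPTIONS /mnt/data` from util-linux shows the same information.

```sh
#!/bin/sh
# has_mount_option MOUNTPOINT OPTION [FILE]
# Hypothetical helper: succeeds if MOUNTPOINT is currently mounted with
# OPTION, according to the live mount table (/proc/mounts by default).
has_mount_option() {
    awk -v mp="$1" -v opt="$2" '
        $2 == mp {
            found = 0          # the last matching entry wins (overmounts)
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++) if (opts[i] == opt) found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "${3:-/proc/mounts}"
}
```

For example, `has_mount_option /mnt/data noatime && echo "noatime active"`.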

- The `tune2fs` utility allows you to configure various parameters of the ext4 file system. For example, you can change the file system check interval:

  `tune2fs -i 0 /dev/sda1`

  This command disables time-based periodic file system checks. Those checks run at boot, so disabling them mainly shortens boot time rather than speeding up everyday I/O. The trade-off is that corruption after a failure may go unnoticed for longer, so use this setting with caution.
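The current setting can be read from the superblock summary printed by `tune2fs -l`, whose «Check interval» line gives the interval in seconds (0 means time-based checks are disabled). A small parsing sketch; the function name `check_interval_seconds` is hypothetical, and running `tune2fs -l` against a real device usually requires root.

```sh
#!/bin/sh
# check_interval_seconds
# Hypothetical helper: reads `tune2fs -l` output on stdin and prints the
# numeric value of the "Check interval:" line (in seconds).
check_interval_seconds() {
    awk -F: '$1 == "Check interval" {
                 split($2, f, " ")   # value is the first word after the colon
                 print f[1]
                 found = 1
             }
             END { exit (found ? 0 : 1) }'
}
```

Usage: `tune2fs -l /dev/sda1 | check_interval_seconds`, before and after changing the interval.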

Using the I/O Scheduler

The I/O scheduler is an operating system component that manages the order in which read and write requests are sent to the disk. Choosing and configuring the I/O scheduler can significantly affect the performance of the virtual machine’s disk subsystem, especially under high load. Different I/O schedulers use different scheduling algorithms and suit different types of workloads.

The names below are those of the classic single-queue schedulers. On Linux 5.0 and later, which use only the multi-queue block layer (blk-mq), the schedulers to choose from are `none`, `mq-deadline`, `bfq`, and `kyber`: `none` takes the place of Noop, `mq-deadline` of Deadline, and `bfq` is the closest counterpart of CFQ.

Main Types of I/O Schedulers:

- CFQ (Completely Fair Queuing): Strives to distribute disk bandwidth fairly between processes. Suitable for most scenarios, especially when multiple tasks are running on a virtual machine at the same time.

- Noop (No Operation): The simplest scheduler, which passes requests to the disk in the order they arrive, only merging adjacent ones. Suitable for SSD disks, where seek time is negligible, and for virtual disks whose requests are reordered again by the hypervisor and the storage array.

- Deadline: Tries to fulfill requests within a specified time (deadline). Suitable for applications that require low latency, such as databases and real-time applications.

- Kyber: A scheduler for the multi-queue block layer, designed for fast devices such as modern NVMe solid-state drives.

- For virtual machines working with SSD disks, it is recommended to use the Noop scheduler (`none` on kernels 5.0 and later) or Kyber.

- For virtual machines working with HDD disks and performing multiple tasks at the same time, it is recommended to use the CFQ scheduler (BFQ on kernels 5.0 and later).

- For virtual machines that require low latency, it is recommended to use the Deadline scheduler (`mq-deadline` on kernels 5.0 and later).
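Because the available names depend on the kernel (legacy `noop`, `deadline`, `cfq` versus multi-queue `none`, `mq-deadline`, `bfq`, `kyber`), a script applying these recommendations has to choose from whatever the device actually offers. This sketch takes the contents of a device's `scheduler` file plus a preference list and prints the first preferred scheduler that is available; the function name `pick_scheduler` and the preference orders in the tests are assumptions.

```sh
#!/bin/sh
# pick_scheduler "AVAILABLE" PREF...
# Hypothetical helper: AVAILABLE is the content of
# /sys/block/<dev>/queue/scheduler, e.g. "[mq-deadline] kyber bfq none".
# Prints the first scheduler from PREF... that appears in AVAILABLE.
pick_scheduler() {
    local available want have
    # Drop the brackets that mark the currently active scheduler.
    available=$(printf '%s\n' "$1" | tr -d '[]')
    shift
    for want in "$@"; do
        for have in $available; do
            if [ "$want" = "$have" ]; then
                echo "$want"
                return 0
            fi
        done
    done
    return 1
}
```

For an SSD-backed disk you might call `pick_scheduler "$(cat /sys/block/sda/queue/scheduler)" none noop kyber`, which yields a valid name on both older and newer kernels.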

To check the current scheduler:

- Run the command:

  `cat /sys/block/sda/queue/scheduler`

  It lists the available schedulers and shows the active one in square brackets, for example:

  `noop deadline [cfq]`
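In scripts, the active scheduler can be extracted from that output by taking the bracketed entry. A one-function sketch; the name `current_scheduler` is hypothetical:

```sh
#!/bin/sh
# current_scheduler "SCHEDULER_FILE_CONTENT"
# Hypothetical helper: prints the entry shown in square brackets,
# i.e. the scheduler that is currently active.
current_scheduler() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([^]]*\)].*/\1/p'
}
```

Usage: `current_scheduler "$(cat /sys/block/sda/queue/scheduler)"`.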

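To change the scheduler until the next reboot:

- Run the command (as root):

  `echo noop > /sys/block/sda/queue/scheduler`

  This command sets the `noop` scheduler for the `/dev/sda` disk (use `none` on kernels 5.0 and later). Warning: this change will not be saved after rebooting.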
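The kernel rejects a name the device does not support with an «Invalid argument» error, so a script may prefer to check the list first. This sketch takes the sysfs directory as an optional parameter so it can be tried against a scratch directory; the function name `set_scheduler` and that parameter are hypothetical, and against the real `/sys/block` it must run as root.

```sh
#!/bin/sh
# set_scheduler DEVICE SCHEDULER [SYSFS_BLOCK_DIR]
# Hypothetical helper: writes SCHEDULER to the device's scheduler file,
# but only if the file lists it as available. Not persistent across reboots.
set_scheduler() {
    local dev=$1 sched=$2 root=${3:-/sys/block}
    local file="$root/$dev/queue/scheduler"
    [ -w "$file" ] || { echo "cannot write $file" >&2; return 1; }
    # Compare against the available names (brackets removed).
    case " $(tr -d '[]' < "$file") " in
        *" $sched "*) ;;
        *) echo "$sched not available for $dev" >&2; return 1 ;;
    esac
    echo "$sched" > "$file"
}
```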

To make the setting persistent:

- Create the file `/etc/udev/rules.d/60-scheduler.rules` with the following content:

  `ACTION=="add|change", KERNEL=="sda", ATTR{queue/scheduler}="noop"`

  This udev rule sets the `noop` scheduler for the `/dev/sda` disk whenever the device is added or changed, including at system boot. Change `sda` to the name of your disk.

- Reboot the system, or run `udevadm control --reload-rules` followed by `udevadm trigger` to apply the changes without a reboot.
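Instead of hard-coding `sda`, udev can match on the `rotational` attribute so that every non-rotational disk gets one scheduler and every rotational disk another. The sketch below only prints such rules for review; the function name `scheduler_rules`, the `sd[a-z]|vd[a-z]` kernel-name patterns, and the scheduler names in the example are assumptions to adapt to your system (for instance `none` and `bfq` on kernels 5.0 and later).

```sh
#!/bin/sh
# scheduler_rules SSD_SCHED HDD_SCHED
# Hypothetical helper: prints udev rules that choose the I/O scheduler
# from the device's "rotational" flag (0 = SSD/NVMe, 1 = HDD).
scheduler_rules() {
    cat <<EOF
ACTION=="add|change", KERNEL=="sd[a-z]|vd[a-z]", ATTR{queue/rotational}=="0", ATTR{queue/scheduler}="$1"
ACTION=="add|change", KERNEL=="sd[a-z]|vd[a-z]", ATTR{queue/rotational}=="1", ATTR{queue/scheduler}="$2"
EOF
}

# Example: preview rules for a 5.x+ kernel. After review, redirect the
# output (as root) to /etc/udev/rules.d/60-scheduler.rules.
scheduler_rules none bfq
```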